For an matrix

with nonsingular block

the Schur complement is

. It is denoted by

. The block with respect to which the Schur complement is taken need not be the

block. Assuming that

is nonsingular, the Schur complement of

in

is

.

More generally, for an index vector , where

, the Schur complement of

in

is

, where

is the complement of

(the vector of indices not in

). Most often, it is the Schur complement of

in

that is of interest, and the general case can be reduced to it by row and column permutations that move

to the

block of

.

Schur Complements in Gaussian Elimination

The reduced submatrices in Gaussian elimination are Schur complements. Write an matrix

with nonzero

element

as

This factorization represents the first step of Gaussian elimination.

After stages of Gaussian elimination we have computed the following factorization, in which the

blocks are

:

where the first matrix on the left is the product of the elementary transformations that reduce the first columns to upper triangular form. Equating

and

blocks in this equation gives

and

. Hence

, which is the Schur complement of

in

.

The next step of the elimination, which zeros out the first column of below the diagonal, succeeds as long as the

) element of

is nonzero (or if the whole first column of

is zero, in which case there is nothing to do, and

is singular in this case).

For a number of matrix structures, such as

- Hermitian (or real symmetric) positive definite matrices,

- totally positive matrices,

- matrices diagonally dominant by rows or columns,

-matrices,

one can show that the Schur complement inherits the structure. For these four structures the element of the matrix is nonzero, so the success of Gaussian elimination (or equivalently, the existence of an LU factorization) is guaranteed. In the first three cases the preservation of structure is also the basis for a proof of the numerical stability of Gaussian elimination.

Inverse of a Block Matrix

The Schur complement arises in formulas for the inverse of a block matrix. If

is nonsingular, we can write

If is nonsingular then inverting gives

So is the

block of

.

One can obtain an analogous formula for in terms of the Schur complement of

in

, in which the inverse of the Schur complement is the

block of

. More generally, we have

.

Test for Positive Definiteness

For Hermitian matrices the Schur complement provides a test for positive definiteness. Suppose

with positive definite. The factorization

shows that is congruent to the block diagonal matrix

, and since congruences preserve inertia (the number of positive, zero, and negative eigenvalues),

is positive definite if and only if

is positive definite, that is, if and only if

is positive definite. The same equivalence holds with “definite” replace by “semidefinite” (with

still required to be positive definite).

Generalized Schur Complement

For in (1) with a possibly singular

block

we can define the generalized Schur complement

, where “+” denotes the Moore-Penrose pseudo-inverse. The generalized Schur complement is useful in the context of Hermitian positive semidefinite matrices, as the following result shows.

Theorem 1.

If

is Hermitian then

is positive semidefinite if and only if

and

and the generalized Schur complement

are both positive semidefinite.

If is positive definite then the range condition is trivially satisfied.

Notes and References

The term “Schur complement” was coined by Haynsworth in 1968, thereby focusing attention on this important form of matrix and spurring many subsequent papers that explore its properties and applications. The name “Schur” was chosen because a 1917 determinant lemma of Schur says that if is nonsingular then

which is obtained by taking determinants in (2).

For much more about the Schur complement see Zhang (2005) or Horn and Johnson (2013).

- Emilie V. Haynsworth, Determination of the Inertia of a Partitioned Matrix, Linear Algebra Appl. 1(1), 73–81, 1968.

- Roger A. Horn and Charles R. Johnson, Matrix Analysis, second edition, Cambridge University Press, 2013. My review of the second edition.

- Fuzhen Zhang, editor, The Schur Complement and Its Applications. Springer-Verlag, New York, 2005, xvi+295 pp.

Related Blog Posts

- What Is a Block Matrix? (2020)

- What is a Diagonally Dominant Matrix? (2021)

- What Is a Symmetric Positive Definite Matrix? (2020)

- What Is a Totally Nonnegative Matrix? (2021)

- What Is an LU Factorization? (2021)

- What Is an M-Matrix? (2021)

- What Is the Inertia of a Matrix? (2022)

- What Is the Matrix Inverse? (2022)

This article is part of the “What Is” series, available from https://nhigham.com/category/what-is and in PDF form from the GitHub repository https://github.com/higham/what-is.

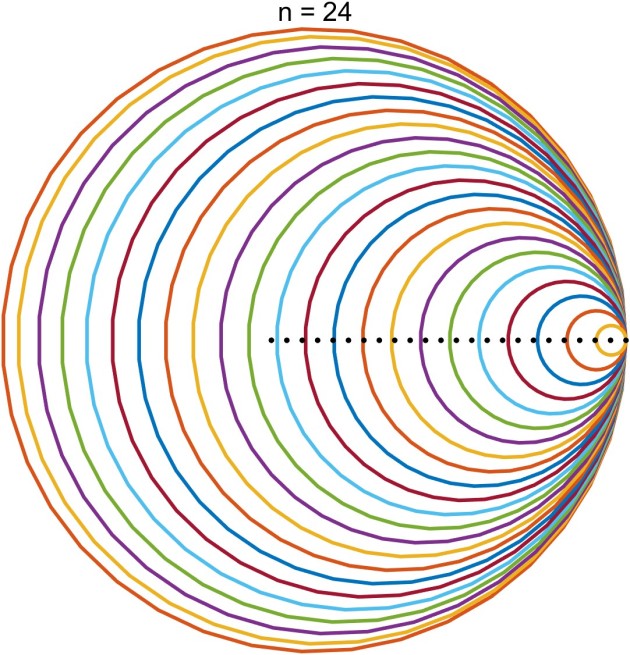

Here are two other matrices whose Gershgorin discs make a graphically interesting plot.

Here are two other matrices whose Gershgorin discs make a graphically interesting plot.