An eigenvalue of a square matrix $A$ is a scalar $\lambda$ such that $Ax = \lambda x$ for some nonzero vector $x$. The vector $x$ is an eigenvector of $A$ and it has the distinction of being a direction that is not changed on multiplication by $A$.

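An $n\times n$ matrix has $n$ eigenvalues. This can be seen by noting that $Ax = \lambda x$ is equivalent to $(\lambda I - A)x = 0$, which means that $\lambda I - A$ is singular, since $x \ne 0$. Hence $\det(\lambda I - A) = 0$. But $\det(\lambda I - A) = \lambda^n + a_{n-1}\lambda^{n-1} + \cdots + a_1\lambda + a_0$ is a scalar polynomial of degree $n$ (the characteristic polynomial of $A$) with nonzero leading coefficient and so has $n$ roots (counted according to multiplicity), which are the eigenvalues of $A$. Since $\det(\lambda I - A) = \det((\lambda I - A)^T) = \det(\lambda I - A^T)$, the eigenvalues of $A^T$ are the same as those of $A$.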
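For a $2\times 2$ matrix the characteristic polynomial is the quadratic $\lambda^2 - \mathrm{trace}(A)\lambda + \det(A)$, so its roots follow from the quadratic formula. The Python sketch below illustrates this and checks that a matrix and its transpose give the same eigenvalues. The names `eig2x2` and `transpose` and the example matrix are assumptions made for illustration. In practice one would call a library routine such as `numpy.linalg.eigvals` rather than solve the characteristic polynomial.

```python
import cmath

def eig2x2(a):
    """Eigenvalues of a 2x2 matrix as the roots of its characteristic polynomial.

    For a = [[p, q], [r, s]] the polynomial is
    lambda^2 - (p + s)*lambda + (p*s - q*r).
    """
    (p, q), (r, s) = a
    trace = p + s
    det = p * s - q * r
    # cmath.sqrt copes with a negative or complex discriminant.
    root = cmath.sqrt(trace * trace - 4 * det)
    return ((trace + root) / 2, (trace - root) / 2)

def transpose(a):
    """Transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*a)]

A = [[4, 1], [2, 3]]  # hypothetical example matrix
print(eig2x2(A))
print(eig2x2(transpose(A)))
```

The example matrix has trace 7 and determinant 10, so both calls return the roots 5 and 2 of $\lambda^2 - 7\lambda + 10$.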

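A real matrix may have complex eigenvalues, but they appear in complex conjugate pairs. Indeed, $Ax = \lambda x$ implies $\overline{A}\,\overline{x} = \overline{\lambda}\,\overline{x}$, so if $A$ is real then $\overline{\lambda}$ is an eigenvalue of $A$ with eigenvector $\overline{x}$.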
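As a small worked example (the matrix is chosen here purely for illustration), consider a real $2\times 2$ matrix whose characteristic polynomial has no real roots:

$$
A = \begin{bmatrix} 1 & -1 \\ 1 & 1 \end{bmatrix}, \qquad
\det(\lambda I - A) = (\lambda - 1)^2 + 1.
$$

The eigenvalues are $\lambda = 1 + \mathrm{i}$ and $\overline{\lambda} = 1 - \mathrm{i}$. Corresponding eigenvectors are $x = [1, -\mathrm{i}]^T$ and $\overline{x} = [1, \mathrm{i}]^T$, as can be checked by direct multiplication:

$$
A \begin{bmatrix} 1 \\ -\mathrm{i} \end{bmatrix}
= \begin{bmatrix} 1 + \mathrm{i} \\ 1 - \mathrm{i} \end{bmatrix}
= (1 + \mathrm{i}) \begin{bmatrix} 1 \\ -\mathrm{i} \end{bmatrix}, \qquad
A \begin{bmatrix} 1 \\ \mathrm{i} \end{bmatrix}
= \begin{bmatrix} 1 - \mathrm{i} \\ 1 + \mathrm{i} \end{bmatrix}
= (1 - \mathrm{i}) \begin{bmatrix} 1 \\ \mathrm{i} \end{bmatrix}.
$$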

Here are some matrices and their eigenvalues.

Note that two of these matrices are upper triangular, that is, $a_{ij} = 0$ for $i > j$. For such a matrix the eigenvalues are the diagonal elements.
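The reason can be sketched in one line. If an $n\times n$ matrix $A$ is upper triangular then so is $\lambda I - A$, and the determinant of a triangular matrix is the product of its diagonal elements, so

$$
\det(\lambda I - A) = \prod_{i=1}^{n} (\lambda - a_{ii}),
$$

whose roots are $a_{11}, a_{22}, \dots, a_{nn}$. The same argument applies to lower triangular matrices.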

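A real symmetric matrix ($A^T = A$) or Hermitian matrix ($A^* = A$, where $A^* = \overline{A}^T$) has real eigenvalues. A proof is $Ax = \lambda x \Rightarrow x^*A^* = \overline{\lambda}x^*$, so premultiplying the first equation by $x^*$ and postmultiplying the second by $x$ gives $x^*Ax = \lambda x^*x$ and $x^*Ax = \overline{\lambda}x^*x$, which means that $(\lambda - \overline{\lambda})x^*x = 0$, or $\lambda = \overline{\lambda}$ since $x^*x \ne 0$. One of the matrices above is symmetric.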

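A real skew-symmetric matrix ($A^T = -A$) or skew-Hermitian complex matrix ($A^* = -A$) has pure imaginary eigenvalues. A proof is similar to the Hermitian case: $Ax = \lambda x \Rightarrow -x^*A = x^*A^* = \overline{\lambda}x^*$ and so $x^*Ax$ is equal to both $\lambda x^*x$ and $-\overline{\lambda}x^*x$, so $\lambda = -\overline{\lambda}$. One of the matrices above is skew-symmetric.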
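Both proofs rest on the quadratic form $x^*Ax$: for an eigenvector $x$ of $A$ we have $\lambda = x^*Ax / x^*x$, and $x^*x$ is real and positive. The Python sketch below checks numerically that $x^*Ax$ is real for a Hermitian matrix and pure imaginary for a skew-Hermitian one, for an arbitrary vector $x$. The helper name `quad_form`, the matrices `H` and `S` and the vector `x` are all assumptions chosen for illustration.

```python
def quad_form(a, x):
    """Return x^* a x for a square matrix a (list of rows) and a vector x."""
    n = len(x)
    return sum(x[i].conjugate() * a[i][j] * x[j]
               for i in range(n) for j in range(n))

# Hypothetical test matrices.
H = [[2, 1 - 1j], [1 + 1j, 3]]      # Hermitian: equal to its conjugate transpose
S = [[1j, 2 + 1j], [-2 + 1j, 0]]    # skew-Hermitian: conjugate transpose is -S
x = [1 + 2j, 3 - 1j]                # arbitrary nonzero complex vector

print(quad_form(H, x))  # imaginary part is zero
print(quad_form(S, x))  # real part is zero
```

Working through the arithmetic by hand for these inputs gives $x^*Hx = 28$ and $x^*Sx = -21\mathrm{i}$.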

In general, the eigenvalues of a matrix $A$ can lie anywhere in the complex plane, subject to restrictions based on matrix structure such as symmetry or skew-symmetry, but they are restricted to the disc centered at the origin with radius $\|A\|$, because for any matrix norm $\|\cdot\|$ it can be shown that every eigenvalue satisfies $|\lambda| \le \|A\|$.
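The bound can be checked numerically without an eigenvalue solver by using an upper triangular matrix, whose eigenvalues are its diagonal elements. The Python sketch below compares the largest eigenvalue modulus with the 1-norm, the $\infty$-norm and the Frobenius norm. The function names and the matrix `T` are assumptions made for illustration.

```python
def norm_1(a):
    """Matrix 1-norm: maximum absolute column sum."""
    return max(sum(abs(row[j]) for row in a) for j in range(len(a[0])))

def norm_inf(a):
    """Matrix infinity-norm: maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in a)

def norm_fro(a):
    """Frobenius norm: square root of the sum of squared moduli."""
    return sum(abs(v) ** 2 for row in a for v in row) ** 0.5

# Hypothetical upper triangular matrix; its eigenvalues are 3, -4 and 2i.
T = [[3, -1, 2],
     [0, -4, 1],
     [0, 0, 2j]]

eigenvalues = [T[i][i] for i in range(len(T))]
largest_modulus = max(abs(lam) for lam in eigenvalues)

for norm in (norm_1, norm_inf, norm_fro):
    print(norm.__name__, norm(T), largest_modulus <= norm(T))
```

Here the largest eigenvalue modulus is 4, while the three norms are 5, 6 and $\sqrt{35} \approx 5.92$, so the bound holds in each case but is not attained.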

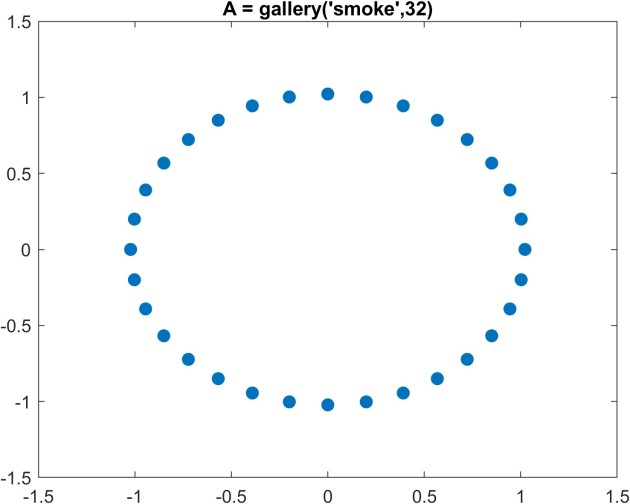

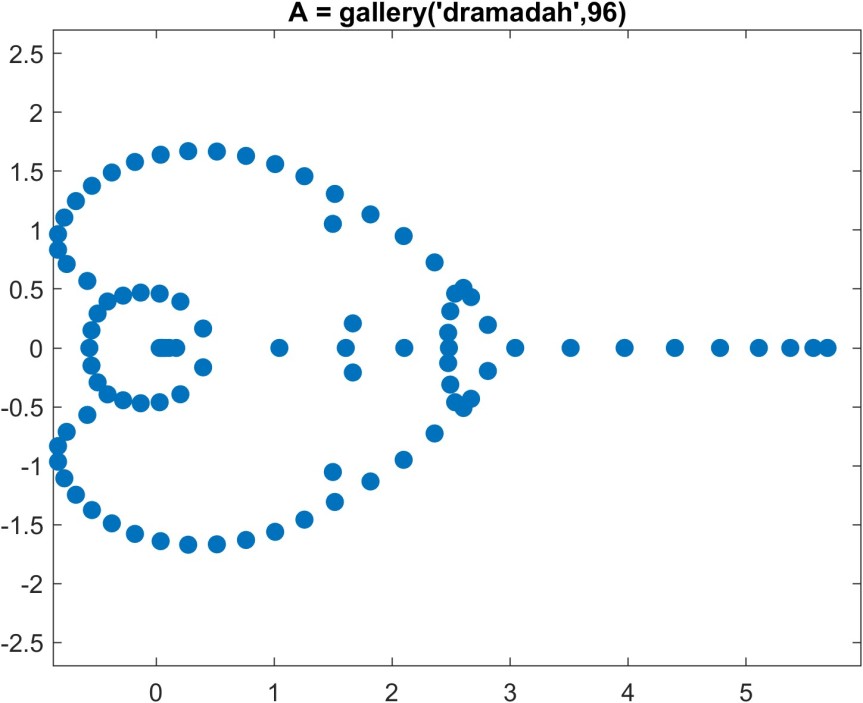

Here are some example eigenvalue distributions, computed in MATLAB. (The eigenvalues are computed at high precision using the Advanpix Multiprecision Computing Toolbox in order to ensure that rounding errors do not affect the plots.) The second and third matrices are real, so the eigenvalues are symmetrically distributed about the real axis. (The first matrix is complex.)

Although this article is about eigenvalues we need to say a little more about eigenvectors. An $n\times n$ matrix $A$ with distinct eigenvalues has $n$ linearly independent eigenvectors. Indeed it is diagonalizable: $A = XDX^{-1}$ for some nonsingular matrix $X$ with $D = \mathrm{diag}(\lambda_i)$ the matrix of eigenvalues. If we write $X$ in terms of its columns as $X = [x_1, x_2, \dots, x_n]$ then $AX = XD$ is equivalent to $Ax_i = \lambda_i x_i$, $i = 1\colon n$, so the $x_i$ are eigenvectors of $A$. Two of the matrices above each have two linearly independent eigenvectors.
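The relation $AX = XD$ is easy to verify directly for a small case. The Python sketch below does so for a $2\times 2$ matrix with distinct eigenvalues 3 and 1, whose eigenvectors are written down by hand. The helper `matmul` and the matrices `A`, `X` and `D` are assumptions chosen for illustration.

```python
def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

A = [[2, 1], [1, 2]]    # hypothetical matrix with eigenvalues 3 and 1
X = [[1, 1], [1, -1]]   # columns are the eigenvectors x_1 = [1, 1], x_2 = [1, -1]
D = [[3, 0], [0, 1]]    # diagonal matrix of the eigenvalues, in the same order

AX = matmul(A, X)
XD = matmul(X, D)
print(AX == XD)

# Column i of AX is A x_i and column i of XD is lambda_i x_i.
for i in range(2):
    print([row[i] for row in AX], [D[i][i] * row[i] for row in X])
```

The columns of `X` are linearly independent, so `X` is nonsingular and $A = XDX^{-1}$.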

If there are repeated eigenvalues there can be fewer than $n$ linearly independent eigenvectors. One of the matrices above has only one eigenvector, up to nonzero scalar multiples. This matrix is a Jordan block. Another of the matrices shows that a matrix with repeated eigenvalues can nevertheless have a full set of linearly independent eigenvectors.
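To make the distinction concrete, here is a sketch with two $2\times 2$ matrices that both have the repeated eigenvalue $1$. They are chosen for illustration and are not necessarily the matrices referred to above. For the Jordan block $J$, the eigenvectors are the nonzero solutions of $(J - I)x = 0$:

$$
J = \begin{bmatrix} 1 & 1 \\ 0 & 1 \end{bmatrix}, \qquad
(J - I)x = \begin{bmatrix} 0 & 1 \\ 0 & 0 \end{bmatrix}
\begin{bmatrix} x_1 \\ x_2 \end{bmatrix}
= \begin{bmatrix} x_2 \\ 0 \end{bmatrix} = 0
\quad\Longrightarrow\quad x_2 = 0,
$$

so every eigenvector of $J$ is a nonzero multiple of $[1, 0]^T$. By contrast, the identity matrix $I$ satisfies $Ix = x$ for every $x$, so any two linearly independent vectors are eigenvectors of $I$.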

Here are some questions about eigenvalues.

- What matrix decompositions reveal eigenvalues? The answer is the Jordan canonical form and the Schur decomposition. The Jordan canonical form shows how many linearly independent eigenvectors are associated with each eigenvalue.

- Can we obtain better bounds on where eigenvalues lie in the complex plane? Many results are available, of which the best known is Gershgorin’s theorem.

- How can we compute eigenvalues? Various methods are available. The QR algorithm is widely used and is applicable to all types of eigenvalue problems.
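As an illustration of the second question, Gershgorin's theorem says that every eigenvalue of $A$ lies in at least one of the discs in the complex plane centered at $a_{ii}$ with radius $\sum_{j\ne i} |a_{ij}|$. The Python sketch below computes these discs and checks the known eigenvalues of a small matrix against them. The function names and the example matrix are assumptions made for illustration.

```python
def gershgorin_discs(a):
    """Return a list of (center, radius) pairs, one disc per row of a."""
    discs = []
    for i, row in enumerate(a):
        # Radius is the sum of the moduli of the off-diagonal entries in row i.
        radius = sum(abs(v) for j, v in enumerate(row) if j != i)
        discs.append((row[i], radius))
    return discs

def in_some_disc(z, discs):
    """True if the point z lies in at least one of the discs."""
    return any(abs(z - center) <= radius for center, radius in discs)

# Hypothetical example: this matrix has eigenvalues 5 and 2,
# the roots of lambda^2 - 7*lambda + 10.
A = [[4, 1], [2, 3]]
discs = gershgorin_discs(A)
print(discs)
print([in_some_disc(lam, discs) for lam in (5, 2)])
```

For this matrix the discs have centers 4 and 3 with radii 1 and 2. The eigenvalue 5 lies on the boundary of the first disc and the eigenvalue 2 lies inside the second.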

Finally, we note that the concept of eigenvalue is more general than just for matrices: it extends to nonlinear operators on finite or infinite dimensional spaces.

References

Many books include treatments of eigenvalues of matrices. We give just three examples.

- Gene Golub and Charles F. Van Loan, Matrix Computations, fourth edition, Johns Hopkins University Press, Baltimore, MD, USA, 2013.

- Roger A. Horn and Charles R. Johnson, Matrix Analysis, second edition, Cambridge University Press, 2013. My review of the second edition.

- Carl D. Meyer, Matrix Analysis and Applied Linear Algebra, Society for Industrial and Applied Mathematics, Philadelphia, PA, USA, 2000.

Related Blog Posts

This article is part of the “What Is” series, available from https://nhigham.com/category/what-is and in PDF form from the GitHub repository https://github.com/higham/what-is.