For a given matrix, Gershgorin’s theorem defines

discs in the complex plane whose union contains the eigenvalues of the matrix. The theorem can provide approximations to eigenvalues. It can also provide qualitative information, such as that all the eigenvalues lie in a particular half-plane.

Theorem 1 (Gershgorin’s theorem).

The eigenvalues of

lie in the union of the

discs in the complex plane

Proof. Let

be an eigenvalue of

and

a corresponding eigenvector and let

. From the

th equation in

we have

Hence

and since

it follows that

belongs to the

th disc,

.

The Gershgorin discs are defined in terms of a summation over the rows of

, but since the eigenvalues of

are the same as those of

the same result holds with summation over the columns.

A consequence of the theorem is that if does not belong to any of the Gershgorin discs then

is nonsingular. This is equivalent to the well-known result that a strictly diagonally dominant matrix is nonsingular.

Another consequence of the theorem is that if is symmetric and all the discs lie in the open right half-plane then the eigenvalues are positive and so

is positive definite. This condition is equivalent to

having positive diagonal elements and being strictly diagonally dominant.

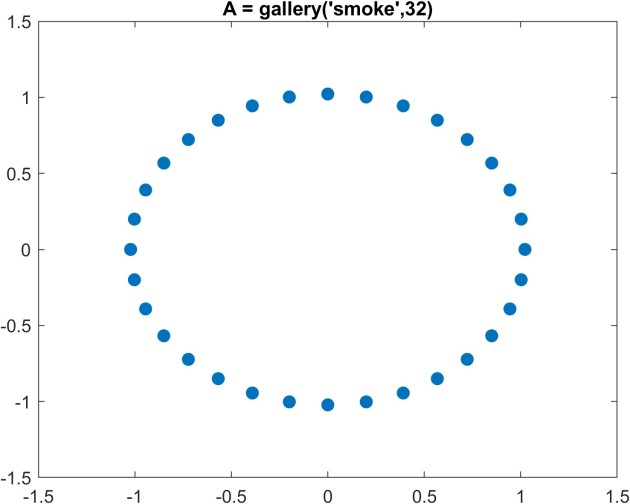

The Gershgorin discs for the matrix

are shown here:

The eigenvalues—three real and one complex conjugate pair—are the black dots. It happens that each disc contains an eigenvalue, but this is not always the case. For the matrix

The eigenvalues—three real and one complex conjugate pair—are the black dots. It happens that each disc contains an eigenvalue, but this is not always the case. For the matrix

the discs are

and the blue disc does not contain an eigenvalue. The next result, which is proved by a continuity argument, provides additional information that increases the utility of Gershgorin’s theorem. In particular it says that if a disc is disjoint from the other discs then it contains an eigenvalue.

and the blue disc does not contain an eigenvalue. The next result, which is proved by a continuity argument, provides additional information that increases the utility of Gershgorin’s theorem. In particular it says that if a disc is disjoint from the other discs then it contains an eigenvalue.

Theorem 2.

If

of the Gershgorin discs of

are disjoint from the other discs then their union contains

eigenvalues of

.

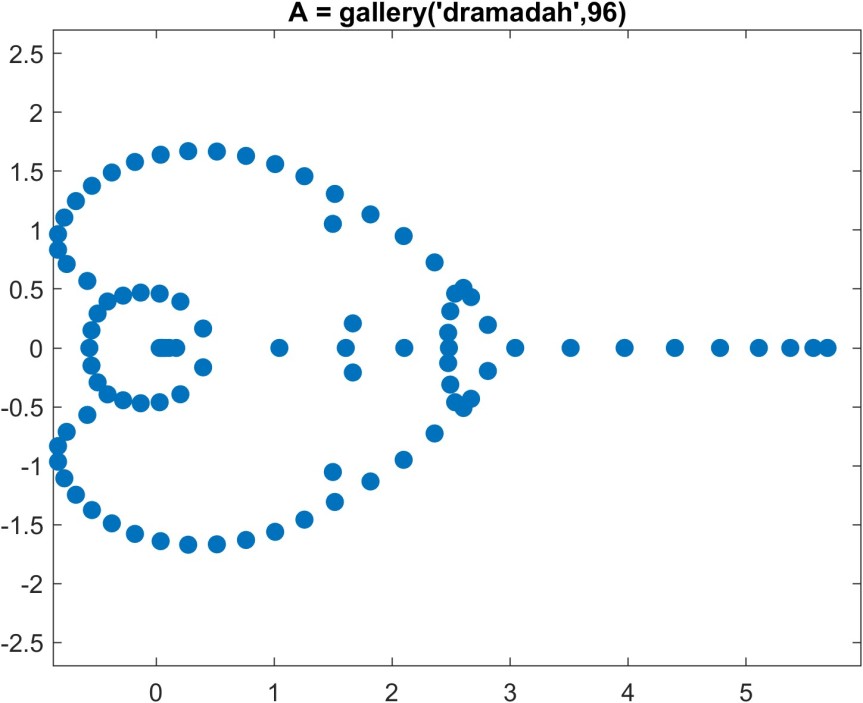

Theorem 2 tells us that the rightmost disc in our example contains one eigenvalue,

, since it is disjoint from the other discs, and the union of the other four discs contains four eigenvalues. Furthermore,

must be real, because if not it occurs in a complex conjugate pair since the matrix is real, and as the disc is symmetric about the real axis

would also lie in the disc, contradicting Theorem 2.

Gershgorin’s theorem is most useful for matrices that are close to being diagonal. A technique that can produce improved eigenvalue estimates is to apply the theorem to for some nonsingular diagonal matrix

. This similarity transformation does not change the eigenvalues but it does change the discs, and the aim is to choose

to reduce the radiuses of the discs. Consider our

example. We know that there is one eigenvalue

in the rightmost disc and that it is real, so

. For

the rightmost disc shrinks and remains distinct from the others and we obtain the sharper bounds

. The discs for

are shown here:

Notes

Most books on matrix analysis or numerical linear algebra include Gershgorin’s theorem.

Eigenvalue inclusion regions have been developed with discs replaced by more complicated shapes, such as Brauer’s ovals of Cassini.

Varga’s 2004 book is devoted to Gershgorin’s theorem and related results. It reproduces Gershgorin’s 1931 paper in an appendix.

References

- S. Gerschgorin. Uber die Abgrenzung der Eigenwerte einer Matrix. Izv. Akad. Nauk. SSSR, 1:749–754, 1931.

- Richard S. Varga. Geršgorin and His Circles. Springer-Verlag, Berlin, Germany, 2004.

Related Blog Posts

- What Is a Diagonally Dominant Matrix? (2021)

- What Is an Eigenvalue? (2022)

This article is part of the “What Is” series, available from https://nhigham.com/category/what-is and in PDF form from the GitHub repository https://github.com/higham/what-is.