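The matrix sign function is the matrix function corresponding to the scalar function of a complex variable

$$\mathrm{sign}(z) = \begin{cases} 1, & \mathrm{Re}\, z > 0, \\ -1, & \mathrm{Re}\, z < 0. \end{cases}$$

Note that this function is undefined on the imaginary axis. The matrix sign function can be obtained from the Jordan canonical form definition of a matrix function: if $A = ZJZ^{-1}$ is a Jordan decomposition with $\mathrm{diag}(J) = \mathrm{diag}(\lambda_i)$, then

$$S = \mathrm{sign}(A) = Z\,\mathrm{sign}(J)\,Z^{-1} = Z\,\mathrm{diag}(\mathrm{sign}(\lambda_i))\,Z^{-1},$$

since all the derivatives of the sign function are zero. The eigenvalues of $S$ are therefore all $\pm 1$. Moreover, $S^2 = I$, so $S$ is an involutory matrix.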
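To make the definition concrete, here is a minimal sketch, assuming NumPy (the text itself uses no particular library), that forms $\mathrm{sign}(A)$ from an eigendecomposition and checks that the result is involutory. It is valid only for diagonalizable $A$ with no eigenvalues on the imaginary axis; the helper name `sign_eig` and the test matrix are illustrative choices, and this is not a recommended numerical method.

```python
import numpy as np


def sign_eig(A):
    """sign(A) = Z diag(sign(lambda_i)) Z^{-1} from an eigendecomposition of A.

    Assumes A is diagonalizable with no eigenvalue on the imaginary axis.
    This mirrors the definition; it is not a numerically robust algorithm.
    """
    lam, Z = np.linalg.eig(A)
    if np.any(np.isclose(lam.real, 0.0)):
        raise ValueError("sign(A) is undefined: eigenvalue on or near the imaginary axis")
    # Scalar sign of each eigenvalue: +1 if Re > 0, -1 if Re < 0.
    S = Z @ np.diag(np.sign(lam.real)) @ np.linalg.inv(Z)
    # For real A the exact result is real; discard rounding-level imaginary parts.
    return S.real if np.isrealobj(A) else S


A = np.array([[4.0, 1.0, 0.0],
              [0.0, -2.0, 3.0],
              [1.0, 0.0, 1.0]])
S = sign_eig(A)
print(np.allclose(S @ S, np.eye(3)))        # S is involutory
print(np.sort(np.linalg.eigvals(S).real))   # all eigenvalues are +1 or -1
```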

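The matrix sign function was introduced by Roberts in 1971 as a tool for model reduction and for solving Lyapunov and algebraic Riccati equations. The fundamental property that Roberts employed is that $(I+S)/2$ and $(I-S)/2$ are projectors onto the invariant subspaces associated with the eigenvalues of $A$ in the open right half-plane and open left half-plane, respectively. Indeed, without loss of generality we can assume that the eigenvalues of $A$ are ordered so that $J = \mathrm{diag}(J_1, J_2)$, with the eigenvalues of $J_1 \in \mathbb{C}^{p\times p}$ in the open left half-plane and those of $J_2 \in \mathbb{C}^{q\times q}$ in the open right half-plane ($p + q = n$). Then

$$S = Z \begin{bmatrix} -I_p & 0 \\ 0 & I_q \end{bmatrix} Z^{-1}$$

and, writing $Z = [\,Z_1 ~~ Z_2\,]$, where $Z_1$ is $n\times p$ and $Z_2$ is $n\times q$, we have

$$\frac{I+S}{2} = Z \begin{bmatrix} 0 & 0 \\ 0 & I_q \end{bmatrix} Z^{-1} = Z_2\, Z^{-1}(p+1\colon n, :), \qquad \frac{I-S}{2} = Z \begin{bmatrix} I_p & 0 \\ 0 & 0 \end{bmatrix} Z^{-1} = Z_1\, Z^{-1}(1\colon p, :).$$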
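The projector identities can be checked numerically. The sketch below assumes NumPy and builds a hypothetical test matrix $A = Z\,\mathrm{diag}(\lambda)\,Z^{-1}$ from a chosen $Z$ and chosen eigenvalues ($p = 2$ in the left half-plane, $q = 1$ in the right), so that $Z_1$, $Z_2$ and $S$ are known exactly.

```python
import numpy as np

# Hypothetical test data: A = Z diag(lam) Z^{-1} with p = 2 eigenvalues in the
# open left half-plane followed by q = 1 in the open right half-plane.
Z = np.array([[1.0, 2.0, 0.0],
              [0.0, 1.0, 1.0],
              [1.0, 0.0, 3.0]])
lam = np.array([-3.0, -0.5, 2.0])
Zinv = np.linalg.inv(Z)
A = Z @ np.diag(lam) @ Zinv

S = Z @ np.diag(np.sign(lam)) @ Zinv   # sign(A) from the same decomposition
I = np.eye(3)
P_plus = (I + S) / 2    # projector for the right half-plane eigenvalues
P_minus = (I - S) / 2   # projector for the left half-plane eigenvalues

p = 2
Z1, Z2 = Z[:, :p], Z[:, p:]
print(np.allclose(P_plus @ P_plus, P_plus))     # idempotent
print(np.allclose(P_plus @ Z2, Z2))             # acts as identity on span(Z2)
print(np.allclose(P_plus @ Z1, 0))              # annihilates span(Z1)
print(np.allclose(P_plus, Z2 @ Zinv[p:, :]))    # Z2 Z^{-1}(p+1:n, :)
print(np.allclose(P_minus, Z1 @ Zinv[:p, :]))   # Z1 Z^{-1}(1:p, :)
print(np.allclose(A @ P_plus, P_plus @ A))      # commutes with A
```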

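Also worth noting are the integral representation

$$\mathrm{sign}(A) = \frac{2}{\pi}\, A \int_0^\infty (t^2 I + A^2)^{-1}\, dt$$

and the concise formula

$$\mathrm{sign}(A) = A\,(A^2)^{-1/2}.$$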
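As a quick sanity check of the concise formula, the following sketch (assuming NumPy, and a diagonalizable test matrix whose square has no eigenvalues on the closed negative real axis) compares $A(A^2)^{-1/2}$ with the eigenvalue-based definition. Computing the inverse square root through an eigendecomposition is a shortcut for illustration only; `inv_sqrt_eig` is a hypothetical helper.

```python
import numpy as np


def inv_sqrt_eig(M):
    """M^{-1/2} via M = V diag(mu) V^{-1}, using the principal square root.

    Assumes M is diagonalizable with no eigenvalue on the closed negative real axis.
    """
    mu, V = np.linalg.eig(M)
    d = 1.0 / np.sqrt(mu.astype(complex))   # principal branch: Re(sqrt) >= 0
    return V @ np.diag(d) @ np.linalg.inv(V)


A = np.array([[4.0, 1.0, 0.0],
              [0.0, -2.0, 3.0],
              [1.0, 0.0, 1.0]])

# Concise formula sign(A) = A (A^2)^{-1/2}.
S_formula = (A @ inv_sqrt_eig(A @ A)).real

# Definition via the eigenvalues of A itself.
lam, Z = np.linalg.eig(A)
S_def = (Z @ np.diag(np.sign(lam.real)) @ np.linalg.inv(Z)).real

print(np.allclose(S_formula, S_def))
```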

Application to Sylvester Equation

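To see how the matrix sign function can be used, consider the Sylvester equation

$$AX - XB = C, \quad A \in \mathbb{C}^{m\times m},\; B \in \mathbb{C}^{n\times n},\; C \in \mathbb{C}^{m\times n}.$$

This equation is the $(1,2)$ block of the equation

$$\begin{bmatrix} A & -C \\ 0 & B \end{bmatrix} = \begin{bmatrix} I_m & X \\ 0 & I_n \end{bmatrix} \begin{bmatrix} A & 0 \\ 0 & B \end{bmatrix} \begin{bmatrix} I_m & X \\ 0 & I_n \end{bmatrix}^{-1}.$$

If $\mathrm{sign}(A) = I_m$ and $\mathrm{sign}(B) = -I_n$ then

$$\mathrm{sign}\left(\begin{bmatrix} A & -C \\ 0 & B \end{bmatrix}\right) = \begin{bmatrix} I_m & X \\ 0 & I_n \end{bmatrix} \begin{bmatrix} I_m & 0 \\ 0 & -I_n \end{bmatrix} \begin{bmatrix} I_m & -X \\ 0 & I_n \end{bmatrix} = \begin{bmatrix} I_m & -2X \\ 0 & -I_n \end{bmatrix},$$

so the solution $X$ can be read from the $(1,2)$ block of the sign of the block upper triangular matrix $\begin{bmatrix} A & -C \\ 0 & B \end{bmatrix}$. The conditions that $\mathrm{sign}(A)$ and $-\mathrm{sign}(B)$ are identity matrices are satisfied for the Lyapunov equation $AX + XA^* = C$ (take $B = -A^*$) when $A$ is positive stable, that is, when the eigenvalues of $A$ lie in the open right half-plane.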
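A minimal sketch of this approach, assuming NumPy and computing the sign of the block matrix with the unscaled Newton iteration that Roberts proposed (discussed later in this article). The function names and test matrices are hypothetical; $A$ is chosen positive stable and $B$ negative stable so that $\mathrm{sign}(A) = I$ and $\mathrm{sign}(B) = -I$.

```python
import numpy as np


def newton_sign(A, tol=1e-12, maxit=100):
    """Newton iteration X_{k+1} = (X_k + X_k^{-1})/2, X_0 = A, for sign(A)."""
    X = np.array(A, dtype=float)
    for _ in range(maxit):
        X_new = 0.5 * (X + np.linalg.inv(X))
        # Stop on a small relative change in the 1-norm (one simple choice).
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new
        X = X_new
    raise RuntimeError("Newton iteration did not converge")


def sylvester_via_sign(A, B, C):
    """Solve AX - XB = C, assuming sign(A) = I and sign(B) = -I."""
    m, n = C.shape
    T = np.block([[A, -C], [np.zeros((n, m)), B]])
    S = newton_sign(T)
    return -0.5 * S[:m, m:]   # the (1,2) block of sign(T) equals -2X


A = np.array([[3.0, 1.0], [0.5, 2.0]])          # eigenvalues in the right half-plane
B = np.array([[-1.0, 0.2, 0.0],
              [0.0, -2.0, 0.3],
              [0.1, 0.0, -1.5]])                # eigenvalues in the left half-plane
C = np.arange(6, dtype=float).reshape(2, 3)
X = sylvester_via_sign(A, B, C)
print(np.linalg.norm(A @ X - X @ B - C))        # residual, at rounding-error level
```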

A generalization of this argument shows that the matrix sign function can be used to solve the algebraic Riccati equation $XFX - A^*X - XA - G = 0$, where $F$ and $G$ are Hermitian.
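The text does not spell out the Riccati construction, so the following is a sketch under our own assumptions, for real data and using NumPy. One standard formulation uses the block matrix $H = \begin{bmatrix} A & -F \\ -G & -A^* \end{bmatrix}$: if $X$ is a stabilizing solution (the eigenvalues of $A - FX$ lie in the open left half-plane), then $H\begin{bmatrix} I \\ X \end{bmatrix} = \begin{bmatrix} I \\ X \end{bmatrix}(A - FX)$, so $(\mathrm{sign}(H) + I)\begin{bmatrix} I \\ X \end{bmatrix} = 0$, an overdetermined but consistent linear system for $X$. The choice $F = G = I$ in the example guarantees that a stabilizing solution exists.

```python
import numpy as np


def newton_sign(A, tol=1e-12, maxit=100):
    """Newton iteration X_{k+1} = (X_k + X_k^{-1})/2, X_0 = A, for sign(A)."""
    X = np.array(A, dtype=float)
    for _ in range(maxit):
        X_new = 0.5 * (X + np.linalg.inv(X))
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new
        X = X_new
    raise RuntimeError("Newton iteration did not converge")


def riccati_via_sign(A, F, G):
    """Stabilizing solution of X F X - A^T X - X A - G = 0 for real A, F, G (sketch)."""
    n = A.shape[0]
    H = np.block([[A, -F], [-G, -A.T]])
    W = newton_sign(H)
    I = np.eye(n)
    # (W + I) [I; X] = 0 rearranged as [W12; W22 + I] X = -[W11 + I; W21].
    lhs = np.vstack([W[:n, n:], W[n:, n:] + I])
    rhs = -np.vstack([W[:n, :n] + I, W[n:, :n]])
    X, *_ = np.linalg.lstsq(lhs, rhs, rcond=None)
    return X


A = np.array([[1.0, 2.0], [0.0, -1.0]])
F = np.eye(2)
G = np.eye(2)
X = riccati_via_sign(A, F, G)
print(np.linalg.norm(X @ F @ X - A.T @ X - X @ A - G))   # residual
print(np.linalg.eigvals(A - F @ X))   # expected in the open left half-plane
```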

Application to the Eigenvalue Problem

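It is easy to see that $S = \mathrm{sign}(A)$ satisfies $\mathrm{trace}(S) = q - p$, where $p$ and $q$ are the number of eigenvalues in the open left half-plane and open right half-plane, respectively (as above), because $S$ has $p$ eigenvalues equal to $-1$ and $q$ equal to $+1$. As $n = p + q$, we have the formulas

$$p = \frac{n - \mathrm{trace}(S)}{2}, \qquad q = \frac{n + \mathrm{trace}(S)}{2}.$$

More generally, for real $a$ and $b$ with $a < b$, and assuming that no eigenvalue of $A$ has real part $a$ or $b$,

$$\frac{1}{2}\,\mathrm{trace}\bigl(\mathrm{sign}(A - aI) - \mathrm{sign}(A - bI)\bigr)$$

is the number of eigenvalues lying in the vertical strip $\{\, z : a < \mathrm{Re}\, z < b \,\}$. Formulas also exist to count the number of eigenvalues in rectangles and more complicated regions.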
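The counting formulas translate directly into code. This sketch assumes NumPy and computes each sign with the unscaled Newton iteration described in the next section; the upper triangular test matrix, with eigenvalues $-3, -1, 2, 5$ on its diagonal, is an illustrative choice. Rounding the trace to the nearest integer absorbs rounding errors.

```python
import numpy as np


def newton_sign(A, tol=1e-12, maxit=100):
    """Newton iteration X_{k+1} = (X_k + X_k^{-1})/2, X_0 = A, for sign(A)."""
    X = np.array(A, dtype=float)
    for _ in range(maxit):
        X_new = 0.5 * (X + np.linalg.inv(X))
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new
        X = X_new
    raise RuntimeError("Newton iteration did not converge")


def count_left_right(A):
    """(p, q): numbers of eigenvalues in the open left and right half-planes."""
    n = A.shape[0]
    t = np.trace(newton_sign(A))
    return int(round((n - t) / 2)), int(round((n + t) / 2))


def count_strip(A, a, b):
    """Number of eigenvalues with a < Re(z) < b (none may have real part a or b)."""
    I = np.eye(A.shape[0])
    t = np.trace(newton_sign(A - a * I) - newton_sign(A - b * I))
    return int(round(t / 2))


A = np.array([[-3.0, 1.0, 2.0, 0.5],
              [0.0, -1.0, 4.0, 1.0],
              [0.0, 0.0, 2.0, 3.0],
              [0.0, 0.0, 0.0, 5.0]])   # eigenvalues -3, -1, 2, 5
print(count_left_right(A))        # (2, 2)
print(count_strip(A, 0.0, 3.0))   # 1: only the eigenvalue 2
```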

Computing the Matrix Sign Function

What makes the matrix sign function so interesting and useful is that it can be computed directly without first computing eigenvalues or eigenvectors of $A$. Roberts noted that the iteration

$$X_{k+1} = \frac{1}{2}\left(X_k + X_k^{-1}\right), \quad X_0 = A,$$

converges quadratically to $\mathrm{sign}(A)$. This iteration is Newton’s method applied to the equation $X^2 = I$, with starting matrix $X_0 = A$. It is one of the rare circumstances in which explicitly inverting matrices is justified!
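A minimal NumPy sketch of Roberts's Newton iteration follows. The stopping test on the relative change in the 1-norm, the iteration cap and the function name are our choices rather than anything prescribed in the text; the explicit inversion with `np.linalg.inv` is deliberate, in line with the remark above.

```python
import numpy as np


def newton_sign(A, tol=1e-12, maxit=100):
    """Newton iteration X_{k+1} = (X_k + X_k^{-1})/2, X_0 = A.

    Returns the final iterate and the number of iterations used.
    """
    X = np.array(A, dtype=float)
    for k in range(1, maxit + 1):
        X_new = 0.5 * (X + np.linalg.inv(X))   # explicit inverse, by design
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new, k
        X = X_new
    raise RuntimeError("no convergence within maxit iterations")


A = np.array([[4.0, 1.0, 0.0],
              [0.0, -2.0, 3.0],
              [1.0, 0.0, 1.0]])
S, its = newton_sign(A)
print(its)
print(np.allclose(S @ S, np.eye(3)), np.allclose(A @ S, S @ A))
```

Since $\mathrm{sign}(A)$ is a function of $A$, checking that the limit commutes with $A$ and squares to $I$ is a cheap sanity test.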

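Various other iterations are available for computing $\mathrm{sign}(A)$. A matrix multiplication-based iteration is the Newton–Schulz iteration

$$X_{k+1} = \frac{1}{2} X_k \left(3I - X_k^2\right), \quad X_0 = A.$$

This iteration is quadratically convergent if $\|I - A^2\| < 1$ for some subordinate matrix norm. The Newton–Schulz iteration is the $[1/0]$ member of a Padé family of rational iterations

$$X_{k+1} = X_k\, p_{\ell m}\!\left(I - X_k^2\right) q_{\ell m}\!\left(I - X_k^2\right)^{-1}, \quad X_0 = A,$$

where $p_{\ell m}(\xi)/q_{\ell m}(\xi)$ is the $[\ell/m]$ Padé approximant to $(1 - \xi)^{-1/2}$ ($p_{\ell m}$ and $q_{\ell m}$ are polynomials of degrees at most $\ell$ and $m$, respectively). The iteration is globally convergent to $\mathrm{sign}(A)$ for $\ell = m - 1$ and $\ell = m$, and for $\ell \ge m + 1$ it converges when $\|I - A^2\| < 1$, with order of convergence $\ell + m + 1$ in all cases.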
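The sketch below, assuming NumPy, implements the Newton–Schulz iteration and, as a second member of the Padé family, the $[1/1]$ iteration. Working out the $[1/1]$ approximant gives $(1 - \xi)^{-1/2} \approx (1 - \xi/4)/(1 - 3\xi/4)$, so with $\xi = 1 - x^2$ the scalar iteration is $x_{k+1} = x_k(3 + x_k^2)/(1 + 3x_k^2)$, which is Halley's method, globally convergent with order $\ell + m + 1 = 3$. The test matrices are illustrative; the first is chosen so that $\|I - A^2\|_1 < 1$.

```python
import numpy as np


def newton_schulz_sign(A, tol=1e-12, maxit=100):
    """Newton-Schulz: X_{k+1} = X_k (3I - X_k^2) / 2, using only matrix products.

    Convergence is assured only when ||I - A^2|| < 1 in some subordinate norm.
    """
    I = np.eye(A.shape[0])
    X = np.array(A, dtype=float)
    for _ in range(maxit):
        X_new = 0.5 * X @ (3 * I - X @ X)
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new
        X = X_new
    raise RuntimeError("Newton-Schulz iteration did not converge")


def halley_sign(A, tol=1e-12, maxit=100):
    """[1/1] Pade iteration (Halley): X_{k+1} = X_k (3I + X_k^2)(I + 3X_k^2)^{-1}."""
    I = np.eye(A.shape[0])
    X = np.array(A, dtype=float)
    for _ in range(maxit):
        X2 = X @ X
        # Both factors are polynomials in X_k and commute, so a linear solve
        # with I + 3X_k^2 replaces the explicit inverse.
        X_new = np.linalg.solve(I + 3 * X2, X @ (3 * I + X2))
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new
        X = X_new
    raise RuntimeError("Halley iteration did not converge")


A = np.array([[1.2, 0.1], [0.0, -0.9]])
print(np.linalg.norm(np.eye(2) - A @ A, 1))   # about 0.44, so Newton-Schulz applies
S_ns = newton_schulz_sign(A)
S_h = halley_sign(A)
print(np.allclose(S_ns, S_h))

B = np.array([[4.0, 1.0], [0.0, -2.0]])   # ||I - B^2|| > 1, but Halley is global
S_B = halley_sign(B)
print(np.allclose(S_B @ S_B, np.eye(2)))
```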

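Although the rate of convergence of these iterations is at least quadratic, and hence asymptotically fast, it can be slow initially. Indeed, for $n = 1$ and $A = a$ with $|a| \gg 1$, the Newton iteration computes $x_1 = (a + a^{-1})/2 \approx a/2$, and so the early iterations make slow progress towards $\pm 1$. Fortunately, it is possible to speed up convergence with the use of scale parameters. The Newton iteration can be replaced by

$$X_{k+1} = \frac{1}{2}\left(\mu_k X_k + \mu_k^{-1} X_k^{-1}\right), \quad X_0 = A,$$

with, for example,

$$\mu_k = \left(\frac{\|X_k^{-1}\|}{\|X_k\|}\right)^{1/2}.$$

This parameter can be computed at essentially no extra cost, since $X_k^{-1}$ is formed by the iteration anyway.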
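The effect of scaling is easy to see for $n = 1$. This sketch assumes NumPy and uses the 1-norm in $\mu_k$ (for a $1\times 1$ matrix every norm gives the same $\mu_k$); the function name is hypothetical. For $a = 10^6$ the unscaled iteration roughly halves its iterate for about twenty steps, whereas the scaled iteration has $\mu_0 = 1/|a|$, so $\mu_0 x_0 = \mathrm{sign}(a)$ and $x_1 = \mathrm{sign}(a)$ at once.

```python
import numpy as np


def newton_sign_iters(A, scale=False, tol=1e-14, maxit=200):
    """Newton iteration for sign(A), optionally with norm scaling.

    With scale=True, mu_k = sqrt(||X_k^{-1}|| / ||X_k||) in the 1-norm
    (the choice of norm is an assumption). Returns (X, iterations).
    """
    X = np.array(A, dtype=float)
    for k in range(1, maxit + 1):
        Xinv = np.linalg.inv(X)   # needed by the iteration anyway
        mu = np.sqrt(np.linalg.norm(Xinv, 1) / np.linalg.norm(X, 1)) if scale else 1.0
        X_new = 0.5 * (mu * X + Xinv / mu)
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new, k
        X = X_new
    raise RuntimeError("no convergence within maxit iterations")


a = np.array([[1e6]])   # n = 1 with |a| >> 1
X_plain, k_plain = newton_sign_iters(a)
X_scaled, k_scaled = newton_sign_iters(a, scale=True)
print(k_plain, k_scaled)   # the unscaled count is much larger
```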

As an example, we took A = gallery('lotkin',4), a nonsymmetric, ill-conditioned matrix with eigenvalues of widely varying magnitude. After six iterations of the unscaled Newton iteration, $X_6$ still had an eigenvalue far from $\pm 1$, showing that $X_6$ is far from $\mathrm{sign}(A)$, which has eigenvalues $\pm 1$. Yet with the scaling $\mu_k$, after six iterations all the eigenvalues of $X_6$ were within a tiny distance of $\pm 1$, and the iteration had converged.
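The Lotkin experiment can be reproduced in outline with the sketch below. It assumes NumPy, builds the matrix from the documented description of MATLAB's gallery('lotkin',n) (the Hilbert matrix with its first row replaced by ones) rather than calling MATLAB, and uses the 1-norm in $\mu_k$; these are our assumptions, so the distances it prints may differ in detail from a MATLAB run. The helper names are hypothetical.

```python
import numpy as np


def lotkin(n):
    """Hilbert matrix with its first row replaced by ones (as gallery('lotkin', n) is documented)."""
    i, j = np.indices((n, n))
    A = 1.0 / (i + j + 1)
    A[0, :] = 1.0
    return A


def newton_steps(A, steps, scale):
    """Run a fixed number of Newton steps for sign(A), optionally 1-norm scaled."""
    X = A.copy()
    for _ in range(steps):
        Xinv = np.linalg.inv(X)
        mu = np.sqrt(np.linalg.norm(Xinv, 1) / np.linalg.norm(X, 1)) if scale else 1.0
        X = 0.5 * (mu * X + Xinv / mu)
    return X


def dist_to_pm1(X):
    """Largest distance from an eigenvalue of X to the nearer of +1 and -1."""
    ev = np.linalg.eigvals(X)
    return np.max(np.minimum(np.abs(ev - 1), np.abs(ev + 1)))


A = lotkin(4)
err_plain = dist_to_pm1(newton_steps(A, 6, scale=False))
err_scaled = dist_to_pm1(newton_steps(A, 6, scale=True))
print(err_plain, err_scaled)
```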

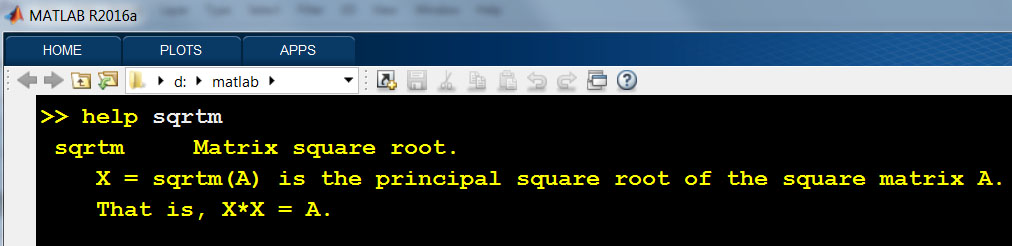

The Matrix Computation Toolbox contains a MATLAB function signm that computes the matrix sign function. It computes a Schur decomposition and then obtains the sign of the triangular Schur factor by a finite recurrence. This function is too expensive for use in applications, but is reliable and is useful for experimentation.

Relation to Matrix Square Root and Polar Decomposition

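The matrix sign function is closely connected with the matrix square root and the polar decomposition. This can be seen through the relations

$$\mathrm{sign}\left(\begin{bmatrix} 0 & A \\ I & 0 \end{bmatrix}\right) = \begin{bmatrix} 0 & A^{1/2} \\ A^{-1/2} & 0 \end{bmatrix}$$

for $A$ with no eigenvalues on the nonpositive real axis, and

$$\mathrm{sign}\left(\begin{bmatrix} 0 & A \\ A^* & 0 \end{bmatrix}\right) = \begin{bmatrix} 0 & U \\ U^* & 0 \end{bmatrix}$$

for nonsingular $A$, where $A = UH$ is a polar decomposition. Among other things, these relations yield iterations for $A^{1/2}$ and $U$ by applying the iterations above to the relevant block $2\times 2$ matrix and reading off the $(1,2)$ block.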
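The two relations can be exercised numerically. This sketch assumes NumPy and a real test matrix (so that $A^* = A^T$), applies the unscaled Newton iteration to each block $2\times 2$ matrix, reads off the $(1,2)$ block, and checks that it gives the principal square root and the unitary polar factor, respectively. The test matrix, with eigenvalues 2 and 5, is an illustrative choice.

```python
import numpy as np


def newton_sign(A, tol=1e-12, maxit=100):
    """Newton iteration X_{k+1} = (X_k + X_k^{-1})/2, X_0 = A, for sign(A)."""
    X = np.array(A, dtype=float)
    for _ in range(maxit):
        X_new = 0.5 * (X + np.linalg.inv(X))
        if np.linalg.norm(X_new - X, 1) <= tol * np.linalg.norm(X_new, 1):
            return X_new
        X = X_new
    raise RuntimeError("Newton iteration did not converge")


A = np.array([[4.0, 1.0], [2.0, 3.0]])   # eigenvalues 2 and 5
n = A.shape[0]
I, O = np.eye(n), np.zeros((n, n))

# Square root: (1,2) block of sign([[0, A], [I, 0]]).
sqrtA = newton_sign(np.block([[O, A], [I, O]]))[:n, n:]
print(np.allclose(sqrtA @ sqrtA, A))

# Polar factor: (1,2) block of sign([[0, A], [A^T, 0]]).
U = newton_sign(np.block([[O, A], [A.T, O]]))[:n, n:]
H = U.T @ A                               # Hermitian positive definite factor
print(np.allclose(U.T @ U, I), np.allclose(H, H.T))
```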

References

This is a minimal set of references, which contain further useful references within.

- Nicholas J. Higham, Functions of Matrices: Theory and Computation, Society for Industrial and Applied Mathematics, Philadelphia, PA, USA, 2008. (Chapter 5.)

- Charles Kenney and Alan Laub, Rational Iterative Methods for the Matrix Sign Function, SIAM J. Matrix Anal. Appl. 12(2), 273–291, 1991.

- J. D. Roberts, Linear Model Reduction and Solution of the Algebraic Riccati Equation by Use of the Sign Function, Internat. J. Control 32, 677–687, 1980.

Related Blog Posts

- What Is a Matrix Function? (2020)

- What Is a Matrix Square Root? (2020)

- What Is the Polar Decomposition? (2020)

This article is part of the “What Is” series, available from https://nhigham.com/category/what-is and in PDF form from the GitHub repository https://github.com/higham/what-is.