A matrix is a rectangular array of numbers on which certain algebraic operations are defined. Matrices provide a convenient way of encapsulating many numbers in a single object and manipulating those numbers in useful ways.

An $m \times n$ matrix has $m$ rows and $n$ columns, and $m$ and $n$ are called the dimensions of the matrix. A matrix is square if it has the same number of rows and columns; otherwise it is rectangular.

An example of a square matrix is

This matrix is symmetric: $a_{ij} = a_{ji}$ for all $i$ and $j$, where $a_{ij}$ denotes the entry at the intersection of row $i$ and column $j$. Matrices are written either with square brackets, as in this example, or round brackets (parentheses).
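As a small illustration of entry indexing and the symmetry condition, here is a minimal MATLAB sketch. The matrix `A` is an arbitrary illustrative choice, and the variable names (`is_square`, `is_sym`, `a23`) are assumptions made for this example.

```matlab
% Illustrative symmetric matrix (an arbitrary choice for this sketch).
A = [2 1 0
     1 3 4
     0 4 5];

[m, n] = size(A);          % dimensions: m rows, n columns
is_square = (m == n);

% Check a(i,j) == a(j,i) for all i and j (only meaningful if A is square).
is_sym = is_square;
for i = 1:m
    for j = 1:n
        if is_square && A(i,j) ~= A(j,i)
            is_sym = false;
        end
    end
end

a23 = A(2,3);              % entry at the intersection of row 2 and column 3
```

The same test can be written more compactly as `isequal(A, A.')`.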

Addition of matrices of the same dimensions is defined in the obvious way: by adding the corresponding entries.
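The entrywise definition of addition can be spelled out with two loops and compared with MATLAB's built-in `+`. This is a sketch with made-up data; the names `C` and `same` are assumptions for the example.

```matlab
A = [1 2 3; 4 5 6];
B = [10 20 30; 40 50 60];   % same dimensions as A

% Entrywise definition: c(i,j) = a(i,j) + b(i,j).
[m, n] = size(A);
C = zeros(m, n);
for i = 1:m
    for j = 1:n
        C(i,j) = A(i,j) + B(i,j);
    end
end

same = isequal(C, A + B);   % the built-in + agrees with the definition
```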

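Multiplication of matrices requires the inner dimensions to match. The product of an $m \times p$ matrix $A$ and a $p \times n$ matrix $B$ is an $m \times n$ matrix $C = AB$ defined by the formula

$$c_{ij} = \sum_{k=1}^{p} a_{ik} b_{kj}, \quad 1 \le i \le m, \quad 1 \le j \le n.$$

When $m = n$, both $AB$ and $BA$ are defined, but they are generally unequal: matrix multiplication is not commutative.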
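The definition of the product, in which the $(i,j)$ entry of $AB$ is the sum over $k$ of $a_{ik} b_{kj}$, translates directly into three nested loops. The MATLAB sketch below uses made-up matrices and hypothetical variable names; it compares the loops with the built-in `*` and then checks non-commutativity.

```matlab
A = [1 2; 3 4; 5 6];      % 3-by-2
B = [1 0 2; 0 1 3];       % 2-by-3

[m, p]  = size(A);
[p2, n] = size(B);
assert(p == p2, 'Inner dimensions must match.');

% c(i,j) = sum over k of a(i,k)*b(k,j).
C = zeros(m, n);
for i = 1:m
    for j = 1:n
        for k = 1:p
            C(i,j) = C(i,j) + A(i,k)*B(k,j);
        end
    end
end
agrees = isequal(C, A*B);

% Here m = n = 3, so both products exist, but they differ even in size.
size_AB = size(A*B);      % 3-by-3
size_BA = size(B*A);      % 2-by-2

% Two square matrices of the same size need not commute either.
X = [1 2; 3 4];
Y = [0 1; 1 0];
commute = isequal(X*Y, Y*X);
```

The loops are for exposition only; in practice `A*B` is the way to form the product.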

The inverse of a square matrix $A$ is a matrix $X$ such that $AX = XA = I$, where $I$ is the identity matrix, which has ones on the main diagonal (that is, in the $(i,i)$ position for all $i$) and zeros off the diagonal. For rectangular matrices various notions of generalized inverse exist.
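A quick numerical check of the defining property of the inverse might look as follows in MATLAB. The matrix is an illustrative choice with determinant 1, and the residual names are assumptions; because `inv` works in floating point, the products are compared with the identity up to a small tolerance rather than exactly.

```matlab
A = [2 1; 1 1];           % determinant is 1, so A is invertible
X = inv(A);               % computed in floating-point arithmetic
I = eye(2);               % 2-by-2 identity matrix

% Both residuals should be tiny (of the order of rounding error).
res_left  = norm(X*A - I);
res_right = norm(A*X - I);
```

For solving a linear system $Ax = b$, MATLAB's backslash (`A\b`) is generally preferred to forming `inv(A)` explicitly.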

The transpose of an $m \times n$ matrix $A$, written $A^T$, is the $n \times m$ matrix whose $(i,j)$ entry is $a_{ji}$. For a complex matrix, the conjugate transpose, written $A^*$ or $A^H$, has $(i,j)$ entry $\overline{a}_{ji}$.
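In MATLAB the two operations have separate operators: `.'` is the transpose and `'` is the conjugate transpose (they coincide for real matrices). A minimal sketch with made-up data:

```matlab
A  = [1 2 3; 4 5 6];            % 2-by-3 real matrix
At = A.';                       % transpose: 3-by-2

Z  = [1+2i, 3-1i; 0, 4i];       % complex matrix
Zt = Z.';                       % transpose (no conjugation)
Zh = Z';                        % conjugate transpose
```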

In linear algebra, a matrix represents a linear transformation between two vector spaces in terms of particular bases for each space.
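To make this concrete, the following MATLAB sketch treats a $3 \times 2$ matrix as a map from vectors of length 2 to vectors of length 3, with respect to the standard bases. It checks linearity on one example and shows that the columns of the matrix are the images of the basis vectors. The data and names are illustrative assumptions, and integer entries are used so that the comparisons are exact.

```matlab
A = [1 2; 0 1; 3 -1];     % maps vectors of length 2 to vectors of length 3
x = [1; 2];
y = [4; -3];
alpha = 5;

% Linearity: A*(alpha*x + y) equals alpha*(A*x) + A*y.
lhs = A*(alpha*x + y);
rhs = alpha*(A*x) + A*y;
linear = isequal(lhs, rhs);

% The first column of A is the image of the first standard basis vector.
e1 = [1; 0];
first_col = isequal(A*e1, A(:,1));
```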

Vectors and scalars are special cases of matrices: column vectors are $n \times 1$, row vectors are $1 \times n$, and scalars are $1 \times 1$.
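MATLAB's `size` function reports these dimensions directly, and the matrix product rules apply to vectors unchanged. A short sketch with illustrative values:

```matlab
x = [1; 2; 3];            % column vector: 3-by-1
y = [1 2 3];              % row vector: 1-by-3
s = 7;                    % scalar: 1-by-1

size_x = size(x);
size_y = size(y);
size_s = size(s);

inner = y*x;              % (1-by-3)*(3-by-1) gives a 1-by-1 result
outer = x*y;              % (3-by-1)*(1-by-3) gives a 3-by-3 matrix
```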

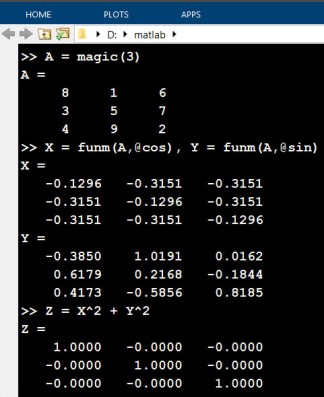

Many programming languages and problem-solving environments support arrays. Operations on arrays are typically defined componentwise, so that, for example, multiplying two arrays multiplies the corresponding pairs of entries, which is not the same as matrix multiplication. The quintessential programming environment for matrices is MATLAB, in which a matrix is the core data type.
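MATLAB makes the distinction explicit: `*` is matrix multiplication and `.*` is the componentwise product. The following sketch, with made-up matrices, shows that the two give different results.

```matlab
A = [1 2; 3 4];
B = [5 6; 7 8];

P_matrix = A*B;           % matrix product
P_entry  = A.*B;          % componentwise product of corresponding entries

differ = ~isequal(P_matrix, P_entry);
```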

It is possible to give meaning to a matrix with one or both dimensions zero. MATLAB supports such empty matrices. Matrix multiplication generalizes in a natural way to allow empty dimensions:

    >> A = zeros(0,2)*zeros(2,3)
    A =
      0x3 empty double matrix
    >> A = zeros(2,0)*zeros(0,3)
    A =
         0     0     0
         0     0     0

In linear algebra and numerical analysis, matrices are usually written with a capital letter and vectors with a lower case letter. In some contexts matrices are distinguished by boldface.

The term matrix was coined by James Joseph Sylvester in 1850. Arthur Cayley was the first to define matrix algebra, in 1858.

References

- Arthur Cayley, A Memoir on the Theory of Matrices, Philos. Trans. Roy. Soc. London 148, 17–37, 1858.

- Nicholas J. Higham, Sylvester’s Influence on Applied Mathematics, Mathematics Today 50, 202–206, 2014. A version of the article with an extended bibliography containing additional historical references is available as a MIMS EPrint.

- Roger A. Horn and Charles R. Johnson, Matrix Analysis, second edition, Cambridge University Press, 2013. My review of the second edition.

Related Blog Posts

- Empty Matrices in MATLAB (2016).

This article is part of the “What Is” series, available from https://nhigham.com/category/what-is and in PDF form from the GitHub repository https://github.com/higham/what-is.