The CS (cosine-sine) decomposition reveals close relationships between the singular value decompositions (SVDs) of the blocks of an orthogonal matrix expressed in block $2\times 2$ form. In full generality, it applies when the diagonal blocks are not necessarily square. We focus here mainly on the most practically important case of square diagonal blocks.

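Let $Q \in \mathbb{R}^{n\times n}$ be orthogonal and suppose that $n = 2p$ is even and $Q$ is partitioned into four equally sized blocks:

$$Q = \begin{bmatrix} Q_{11} & Q_{12} \\ Q_{21} & Q_{22} \end{bmatrix}, \qquad Q_{ij} \in \mathbb{R}^{p\times p}.$$

Then there exist orthogonal matrices $U_1, U_2, V_1, V_2 \in \mathbb{R}^{p\times p}$ such that

$$\begin{bmatrix} U_1^T & 0 \\ 0 & U_2^T \end{bmatrix} \begin{bmatrix} Q_{11} & Q_{12} \\ Q_{21} & Q_{22} \end{bmatrix} \begin{bmatrix} V_1 & 0 \\ 0 & V_2 \end{bmatrix} = \begin{bmatrix} C & -S \\ S & C \end{bmatrix},$$

where $C = \mathrm{diag}(c_i)$ and $S = \mathrm{diag}(s_i)$ with $c_i \ge 0$, $s_i \ge 0$, and $c_i^2 + s_i^2 = 1$ for all $i$. This CS decomposition comprises four SVDs:

$$Q_{11} = U_1 C V_1^T, \quad Q_{12} = U_1 (-S) V_2^T, \quad Q_{21} = U_2 S V_1^T, \quad Q_{22} = U_2 C V_2^T.$$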

(Strictly speaking, for $Q_{12}$ we need to move the minus sign from $S$ to $U_1$ or $V_2$ to obtain an SVD.) The orthogonality ensures that there are only four different singular vector matrices instead of eight, and it makes the singular values of the blocks closely linked. We also obtain SVDs of four cross products of the blocks: $Q_{11}^T Q_{12} = V_1 (-CS) V_2^T$, etc.
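As a worked check, the cross products follow by substituting the block SVDs $Q_{11} = U_1 C V_1^T$, $Q_{12} = -U_1 S V_2^T$, $Q_{21} = U_2 S V_1^T$, $Q_{22} = U_2 C V_2^T$ and cancelling the orthogonal factor in the middle. Which four products are meant by "etc." is our reading; the ones below are the natural candidates.

$$\begin{aligned}
Q_{11}^T Q_{12} &= (V_1 C U_1^T)(-U_1 S V_2^T) = V_1 (-CS) V_2^T, \\
Q_{21}^T Q_{22} &= (V_1 S U_2^T)(U_2 C V_2^T) = V_1 (SC) V_2^T, \\
Q_{11} Q_{21}^T &= (U_1 C V_1^T)(V_1 S U_2^T) = U_1 (CS) U_2^T, \\
Q_{12} Q_{22}^T &= (-U_1 S V_2^T)(V_2 C U_2^T) = U_1 (-SC) U_2^T.
\end{aligned}$$

Since $C$ and $S$ are diagonal, $CS = SC = \mathrm{diag}(c_i s_i)$, so every one of these products has singular values $c_i s_i$. The first two sum to zero, as they must because the first $p$ columns of $Q$ are orthogonal to the last $p$.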

Note that for $p = 1$, the CS decomposition reduces to the fact that any $2\times 2$ orthogonal matrix is of the form $\begin{bmatrix} c & -s \\ s & c \end{bmatrix}$ (a rotation) up to multiplication of a row or column by $-1$.

A consequence of the decomposition is that $Q_{11}$ and $Q_{22}$ have the same 2-norms and Frobenius norms, as do their inverses if they are nonsingular. The same is true for $Q_{12}$ and $Q_{21}$.
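The norm equalities can be checked numerically. The following is a minimal sketch in Python with NumPy, not a CS decomposition algorithm: it builds an orthogonal matrix from assumed factors (random orthogonal `U1`, `U2`, `V1`, `V2` and arbitrarily chosen angles `theta`, all hypothetical) and then compares the norms of the blocks.

```python
import numpy as np

rng = np.random.default_rng(0)
p = 3  # block size, so Q is 2p-by-2p


def random_orthogonal(k):
    """Orthogonal factor from the QR factorization of a random k-by-k matrix."""
    q, _ = np.linalg.qr(rng.standard_normal((k, k)))
    return q


# Hypothetical ingredients: four orthogonal factors and angles in (0, pi/2).
U1, U2, V1, V2 = (random_orthogonal(p) for _ in range(4))
theta = rng.uniform(0.1, 1.4, size=p)
C, S = np.diag(np.cos(theta)), np.diag(np.sin(theta))

# Assemble Q block by block from the four SVDs.
Q11, Q12 = U1 @ C @ V1.T, -U1 @ S @ V2.T
Q21, Q22 = U2 @ S @ V1.T, U2 @ C @ V2.T
Q = np.block([[Q11, Q12], [Q21, Q22]])


def norms(A):
    """2-norm and Frobenius norm of A and of its inverse."""
    Ainv = np.linalg.inv(A)
    return np.array([np.linalg.norm(A, 2), np.linalg.norm(A, "fro"),
                     np.linalg.norm(Ainv, 2), np.linalg.norm(Ainv, "fro")])


print("Q is orthogonal:     ", np.allclose(Q.T @ Q, np.eye(2 * p)))
print("norms of Q11 and Q22:", np.allclose(norms(Q11), norms(Q22)))
print("norms of Q12 and Q21:", np.allclose(norms(Q12), norms(Q21)))
```

The angles are kept away from $0$ and $\pi/2$ so that all four blocks are nonsingular and the inverses exist.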

Now we drop the requirement that is even and consider diagonal blocks of different sizes:

The CS decomposition now has the form

with ,

,

, and

, and

and

(both now

), having the same properties as before. The new feature for

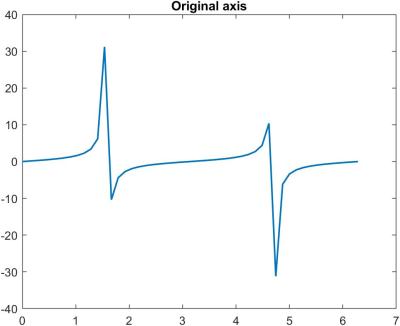

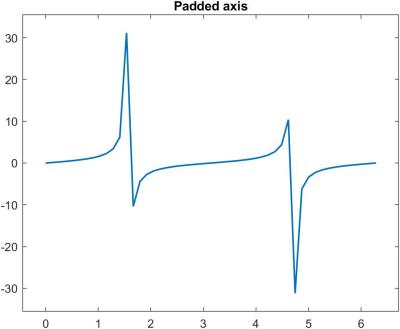

is the identity matrix in the bottom right-hand corner on the right-hand side. Here is an example with

and

, with elements shown to two decimal places:
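The sketch below, in Python with NumPy, builds an illustrative instance of this form and prints the right-hand side to two decimal places. The sizes $n = 5$ and $p = 2$, the angles, and the random orthogonal factors are all assumptions made for this sketch and are not claimed to match the values of the original example.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 5, 2  # assumed sizes for this sketch, with p < n/2


def random_orthogonal(k):
    """Orthogonal factor from the QR factorization of a random k-by-k matrix."""
    q, _ = np.linalg.qr(rng.standard_normal((k, k)))
    return q


def block_diag2(A, B):
    """Block diagonal matrix diag(A, B)."""
    return np.block([[A, np.zeros((A.shape[0], B.shape[1]))],
                     [np.zeros((B.shape[0], A.shape[1])), B]])


theta = np.array([0.4, 1.1])  # assumed angles in (0, pi/2)
C, S = np.diag(np.cos(theta)), np.diag(np.sin(theta))

# Right-hand side [C -S 0; S C 0; 0 0 I], starting from the identity.
D = np.eye(n)
D[:p, :p], D[:p, p:2 * p] = C, -S
D[p:2 * p, :p], D[p:2 * p, p:2 * p] = S, C

U = block_diag2(random_orthogonal(p), random_orthogonal(n - p))
V = block_diag2(random_orthogonal(p), random_orthogonal(n - p))
Q = U @ D @ V.T  # an orthogonal matrix whose CS decomposition is known

# Applying the block diagonal transformations recovers the structured matrix.
recovered = U.T @ Q @ V
print(np.round(recovered, 2) + 0.0)  # adding 0.0 turns any -0.0 into 0.0
```

The printed matrix has the $2\times 2$ cosine and sine blocks in its leading $4\times 4$ part and a single $1$ in the bottom right-hand corner, which is the $I_{n-2p}$ block.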

We mention two interesting consequences of the CS decomposition.

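- With $p = 1$: if $q_{11} = 0$ then $Q_{22}$ is singular.

- For unequally sized diagonal blocks it is no longer always true that $Q_{11}$ and $Q_{22}$ have the same norms, but their inverses do: $\|Q_{11}^{-1}\|_2 = \|Q_{22}^{-1}\|_2 = 1/\min_i c_i \ge 1$. When $p = 1$, this relation becomes $\|Q_{22}^{-1}\|_2 = 1/|q_{11}|$.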
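Both consequences can be read off from the diagonal blocks of the decomposition. In the notation used here (orthogonal $U_1$, $V_1$ of order $p$, orthogonal $U_2$, $V_2$ of order $n-p$, and $C = \mathrm{diag}(c_i)$ of order $p$), the $(1,1)$ and $(2,2)$ blocks give

$$Q_{11} = U_1 C V_1^T, \qquad Q_{22} = U_2 \begin{bmatrix} C & 0 \\ 0 & I_{n-2p} \end{bmatrix} V_2^T.$$

So the singular values of $Q_{22}$ are the $c_i$ together with $n-2p$ ones. For $p < n/2$ this gives $\|Q_{22}\|_2 = 1$, whereas $\|Q_{11}\|_2 = \max_i c_i$ can be smaller. The smallest singular value of both blocks is $\min_i c_i$, so the 2-norms of the inverses are both $1/\min_i c_i$. For $p = 1$ we have $c_1 = |q_{11}|$, so $Q_{22}$ is singular exactly when $q_{11} = 0$.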

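The CS decomposition also exists for a rectangular matrix with orthonormal columns,

$$Q = \begin{bmatrix} Q_1 \\ Q_2 \end{bmatrix}, \qquad Q_1 \in \mathbb{R}^{p\times n}, \quad Q_2 \in \mathbb{R}^{q\times n}, \quad p \ge n, \quad q \ge n.$$

Now the decomposition takes the form

$$\begin{bmatrix} U_1^T & 0 \\ 0 & U_2^T \end{bmatrix} \begin{bmatrix} Q_1 \\ Q_2 \end{bmatrix} V = \begin{bmatrix} C \\ S \end{bmatrix},$$

where $U_1 \in \mathbb{R}^{p\times p}$, $U_2 \in \mathbb{R}^{q\times q}$, and $V \in \mathbb{R}^{n\times n}$ are orthogonal and $C$ and $S$ have the same form as before except that they are rectangular.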
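The link between the two blocks in the rectangular case can be seen numerically without computing the full decomposition. The following Python sketch uses NumPy; the sizes `p`, `q`, `n` and the random test matrix are assumptions made for illustration. It checks that the singular values of the top and bottom blocks pair up as cosines and sines, and that the right singular vectors of the top block also diagonalize the Gram matrix of the bottom block.

```python
import numpy as np

rng = np.random.default_rng(2)
p, q, n = 4, 3, 3  # assumed sizes: p + q rows, n columns, p >= n and q >= n

# A matrix with orthonormal columns: thin QR factor of a random matrix.
Q, _ = np.linalg.qr(rng.standard_normal((p + q, n)))
Q1, Q2 = Q[:p, :], Q[p:, :]

# Singular values of the two blocks; reverse s so that it pairs with c.
_, c, Vt = np.linalg.svd(Q1)
s = np.linalg.svd(Q2, compute_uv=False)[::-1]
print("c_i^2 + s_i^2 =", c**2 + s**2)

# The same V works for both blocks: V^T (Q2^T Q2) V is diagonal.
G = Vt @ Q2.T @ Q2 @ Vt.T
print("off-diagonal size:", np.abs(G - np.diag(np.diag(G))).max())
```

The pairing works because $Q_1^TQ_1 + Q_2^TQ_2 = I$, so the two Gram matrices share eigenvectors and their eigenvalues sum to one.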

The most general form of the CS decomposition is for an orthogonal matrix with diagonal blocks that are not square. Now the matrix on the right-hand side has a more complicated block structure (see the references for details).

The CS decomposition arises in measuring angles and distances between subspaces. These are defined in terms of the orthogonal projectors onto the subspaces, so singular values of orthonormal matrices naturally arise.

Software for computing the CS decomposition is available in LAPACK, based on an algorithm of Sutton (2009). We used a MATLAB interface to it, available on MathWorks File Exchange, for the numerical example. Note that the output of this code is not quite in the form in which we have presented the decomposition, so some post-processing is required to achieve it.
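For readers working in Python, recent versions of SciPy provide `scipy.linalg.cossin`, which to our understanding is built on the LAPACK CS decomposition routines. The sketch below assumes SciPy is installed and uses the equal-block case $n = 2p$, where the middle factor returned by the function should have the form presented above. For unequal blocks SciPy arranges the identity and zero blocks differently, so its documentation should be consulted.

```python
import numpy as np
from scipy.linalg import cossin

rng = np.random.default_rng(3)
p = 2

# Random orthogonal test matrix of order 2p (QR factor of a Gaussian matrix).
Q, _ = np.linalg.qr(rng.standard_normal((2 * p, 2 * p)))

# u = diag(U1, U2), vh = diag(V1, V2)^T, cs = [C -S; S C], with Q = u @ cs @ vh.
u, cs, vh = cossin(Q, p=p, q=p)

C = cs[:p, :p]  # cosine block
S = cs[p:, :p]  # sine block
print("reconstruction ok:", np.allclose(u @ cs @ vh, Q))
print("cosines:", np.diag(C))
print("sines:  ", np.diag(S))
```

Passing `separate=True` returns the four orthogonal factors and the angles separately rather than as assembled matrices.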

References

This is a minimal set of references, which contain further useful references within.

- Gene Golub and Charles F. Van Loan, Matrix Computations, fourth edition, Johns Hopkins University Press, Baltimore, MD, USA, 2013.

- C. C. Paige and M. Wei, History and Generality of the CS Decomposition, Linear Algebra Appl. 208/209, 303–326, 1994.

- Brian Sutton, Computing the Complete CS Decomposition, Numer. Algorithms 50(1), 33–65, 2009.

Related Blog Posts

This article is part of the “What Is” series, available from https://nhigham.com/category/what-is and in PDF form from the GitHub repository https://github.com/higham/what-is.